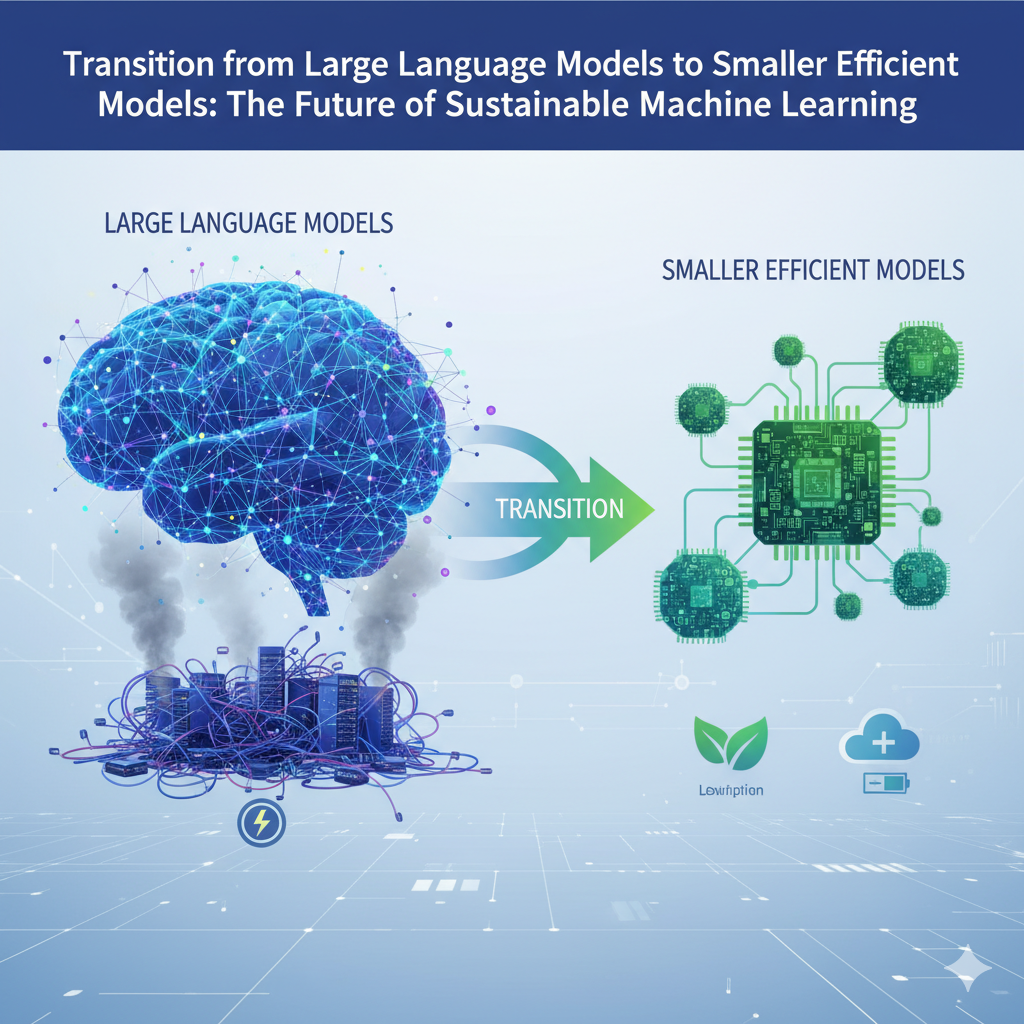

Transition from Large Language Models to Smaller, Efficient Models: The Future of Sustainable Machine Learning

In the early days of the large language model era, size was everything. The bigger the model, the smarter it seemed. OpenAI’s GPT-3, Google’s PaLM, and Meta’s LLaMA set new benchmarks by pushing scale further than ever before. But as enterprises began deploying these models in real-world environments, a new challenge surfaced: sustainability.

Training and running these massive systems requires enormous energy, infrastructure, and spending, often out of reach for most businesses. This realization has set the stage for a new era of smaller, efficient models that deliver comparable performance on many tasks with far fewer resources.

So, what does this shift mean for the future of machine learning, and why should business leaders pay attention?

The Cost of Going Big

Large Language Models (LLMs) are undeniably powerful. They can generate code, write content, analyze sentiment, and even reason across domains. Yet, they come at a price:

- Massive compute requirements: Training a large model can consume as much energy as hundreds of homes use over several months.

- High latency: Running large models in production introduces delays that impact user experience.

- Data center dependence: Constant reliance on GPU clusters drives up operational costs.

- Limited accessibility: Only a handful of companies can afford to train or deploy models of this scale.

This imbalance has driven researchers and companies toward a more sustainable goal: efficiency without compromise.

The Rise of Smaller, Smarter Models

The future is not necessarily bigger; it’s better optimized. Techniques like quantization, knowledge distillation, and parameter pruning have made it possible to create compact models that perform nearly as well as their large counterparts on many tasks.
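To make two of these techniques, parameter pruning and quantization, a little more concrete, here is a minimal Python sketch that applies magnitude pruning and then symmetric int8 quantization to a toy list of weights. It assumes float32 weights and a single per-tensor scale; the function names (`magnitude_prune`, `quantize_int8`, `dequantize_int8`) are hypothetical, and production toolkits operate on tensors and usually add calibration or fine-tuning to recover accuracy. Note that pruning only saves memory or compute when the zeros are stored in a sparse format or run on hardware that exploits them, whereas quantization directly shrinks each weight from 4 bytes to 1.

```python
"""Sketch of two compression techniques: magnitude pruning and int8 quantization.

Assumptions (illustrative only): weights are a flat list of float32 values,
pruning zeroes the smallest-magnitude fraction, and quantization is symmetric
per-tensor int8. Real toolkits work on tensors and add calibration/fine-tuning.
"""
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out roughly the `sparsity` fraction of weights with the smallest |w|."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to drop
    # Indices sorted by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, which would otherwise divide by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.88, -0.51, 0.02]  # toy values
    pruned = magnitude_prune(weights, sparsity=0.5)
    codes, scale = quantize_int8(pruned)
    restored = dequantize_int8(codes, scale)
    max_err = max(abs(a - b) for a, b in zip(pruned, restored))
    print("pruned:", pruned)
    print("int8 codes:", codes)
    print(f"scale={scale:.4f}, max reconstruction error={max_err:.4f}")
    # float32 uses 4 bytes per weight; int8 uses 1 byte, a 4x reduction.
    print("bytes float32:", 4 * len(weights), "bytes int8:", len(codes))
```

Pruning before quantizing, as here, is one common ordering but not the only one; the right sequence and sparsity level depend on the model and should be checked against accuracy on real data.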

For instance, Google’s MobileBERT, a compact variant of BERT designed for mobile devices, shows that strong results are achievable with a much smaller model, and OpenAI’s GPT-4 Turbo was released as a lower-cost alternative to GPT-4. Similarly, companies like ElevateTrust.AI are pioneering enterprise-grade AI solutions that prioritize both accuracy and efficiency.

Here’s why small models are gaining momentum:

- Lower carbon footprint: Energy-efficient training and inference reduce environmental impact.

- Edge deployment: Models can run on local servers or even mobile devices without needing constant cloud access.

- Faster performance: Smaller architectures enable real-time responses, critical for business workflows.

- Custom training: Compact models can be fine-tuned for domain-specific use cases with less data and cost.

Why Enterprises Are Making the Shift

Enterprises adopting AI are discovering that agility often trumps brute force. Whether it’s automating business operations, analyzing customer behavior, or enhancing product experiences, smaller AI models offer scalability without the baggage of large-scale infrastructure.

Consider the growing need for on-device intelligence in manufacturing, healthcare, and retail. Here, smaller models provide data privacy and compliance advantages, as sensitive information doesn’t need to leave the local environment.

At ElevateTrust.AI’s AI-ML Solutions, teams help businesses deploy optimized AI models that balance speed, accuracy, and cost efficiency. The focus is not just on power but on purpose-built intelligence designed for real-world impact.

Real-World Applications of Efficient AI Models

Smaller models are unlocking new frontiers in AI-driven transformation:

- Generative AI for Content and Media

Using compact generative models, companies can automate marketing copy, visuals, and insights at scale. Explore Generative AI solutions to see how tailored systems can enhance creativity while minimizing resource use.

- Audio and Video Analytics

Intelligent monitoring systems powered by efficient models can process streams in real time, improving safety and quality assurance. Learn more at Audio and Video Analytics.

- Cloud-Native Deployments

With Cloud Deployment, businesses can integrate optimized AI workflows that scale dynamically without overloading infrastructure.

- IT and Security Solutions

Smaller models strengthen threat detection, predictive maintenance, and system automation. Check out IT Solutions for enterprise-grade implementations.

- Smart Attendance Systems

A great example of practical efficiency is CamEdge Attendance System, which uses lightweight vision AI to deliver accurate face recognition with minimal compute requirements.

These solutions highlight a clear trend: sustainable AI is not a downgrade — it’s an evolution.

How to Get Started

Transitioning to efficient AI models starts with understanding your goals and infrastructure limits. Here’s a simple framework to begin:

- Assess your current workloads. Identify where large models are slowing down operations or consuming excess resources.

- Adopt model compression techniques. Use quantization or distillation to reduce size without major accuracy loss.

- Experiment with edge inference. Deploy smaller models closer to where data is generated for faster results.

- Partner with AI experts. Collaborate with trusted solution providers like ElevateTrust.AI to ensure the transition aligns with your long-term business strategy.
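Distillation, mentioned in the compression step above, trains a small "student" model to imitate a larger "teacher". The sketch below shows the widely used soft-target distillation loss for a single example in plain Python. The function names and the default `temperature` and `alpha` values are hypothetical choices for illustration; real training would compute this over batches in a deep learning framework and update only the student's weights.

```python
"""Sketch of a knowledge-distillation loss for one training example.

Assumptions (illustrative only): a large "teacher" and a small "student"
both output raw logits over the same classes; `temperature` and `alpha`
are hypothetical hyperparameter values, not recommendations.
"""
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Blend a soft-target term (match the teacher) with ordinary cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the temperature-softened distributions.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Cross-entropy against the true label at temperature 1.
    ce = -math.log(softmax(student_logits)[label])
    # Multiplying by T^2 keeps the soft-target term on a comparable scale
    # as the temperature changes.
    return alpha * temperature**2 * kl + (1 - alpha) * ce


if __name__ == "__main__":
    teacher = [3.0, 0.5, -1.0]  # hypothetical teacher logits
    student = [1.5, 0.8, -0.2]  # hypothetical student logits
    print(f"loss = {distillation_loss(student, teacher, label=0):.4f}")
```

Raising the temperature flattens both distributions, so the student also learns from how the teacher ranks the wrong answers, not just from its top prediction; `alpha` controls how much weight that signal gets relative to the true labels.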

If you’re uncertain about where to start, you can also book a demo to explore scalable AI deployments tailored for your use case.

The Road Ahead: Sustainable AI at Scale

The industry’s next breakthrough won’t come from another trillion-parameter model. It will come from intelligent, adaptable, and efficient systems that empower businesses to innovate responsibly. Smaller models represent this vision: AI that isn’t just powerful, but also practical, sustainable, and accessible.

Enterprises that adopt this mindset early will lead the next generation of digital transformation: faster, leaner, and smarter.

Check out ElevateTrust.AI to explore trusted, secure, and scalable AI for your enterprise.